Thread plug gages and thread setting plugs are key instruments in manufacturing and quality control. Working thread plug gages check the size of internal threads in parts and confirm that they fall within the specified tolerance range. Thread setting plugs are used to set and verify the thread ring gages that check external threads. Keeping threads within the correct limits helps manufacturers avoid assembly problems, maintain product quality, and prevent costly rework. Thread imperfections can lead to poor assembly or even product failure, and thread plug gages offer a straightforward way to catch them.

- Air Gages

- Bore gages

- Calipers

- Calibration Equipment

- CMMs Coordinate Measuring

- CMM Sensors, Probes & Styli

- Computed Tomography – CT Scanners

- Concentricity Gages

- Contour Measurement

- CUSTOM GAGES & FIXTURING

- CYLINDRICAL GO / NO GO / MASTERS

- Data Collection & SPC

- Depth Gages

- Fixtures & Part Holding CMM, Vision, Optical

- Force & Torque Gages

- Gage Blocks

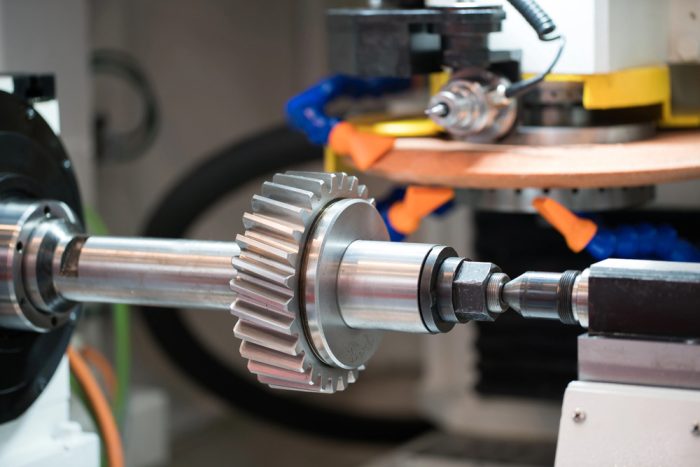

- Gear & Spine Measurement

- GRANITE SURFACE PLATES, SQUARES & PARRALLELS

- HARDNESS TESTERS

- Height Gages

- HEX, HEXALOBE GAGES & SQUARE GAGES

- ID / OD GAGES & SNAP GAGES

- Indicators & Comparator Stands

- Laser Micrometers

- Layout and Shopfloor

- MICROMETERS

- MICROSCOPES & BORESCOPES

- Optical Comparators, Overlay Charts and Readouts

- Robotics and Automation

- ROUNDNESS & FORM

- SHAFT MEASUREMENT

- Structured Light 3D Analysis

- Surface Roughness Testers

- Thread Gages

- Video Measuring Machines

Blog

GD Recognizes Willrich As Strategic Partner

Understanding Thread Plug Classes

Thread plug gages are crucial for verifying the functionality of threaded holes in industrial operations. They check that the pitch diameter of an internal thread falls within its specified limits. Thread plug classes differ in their tolerances, and each class is designed for specific needs in manufacturing. Understanding the differences between these classes is vital for selecting the right gage for each application.

Tips For Buying Thread Setting Plugs

In manufacturing, choosing the right thread setting plug is essential. Setting plugs are the masters used to set and verify thread ring gages, and those ring gages in turn confirm that external threads on components fit as they should. A small error in the setting plug carries through to every part the ring gage checks, which makes selecting a thread setting plug a critical decision. This guide discusses key tips for choosing the right thread setting plugs for your needs.

Thread Setting Plug: Product Highlight

Thread setting plugs are tools used to adjust or reset thread ring gages to the correct size. They play a key role in maintaining the accuracy and performance of thread gages. These plugs are particularly valuable for professionals who need to verify that their gaging equipment is still within tolerance. A thread setting plug helps maintain measurement precision by revealing wear in a ring gage and serving as the reference when the gage is readjusted. Setting plugs are commonly used in the aerospace, automotive, and machining industries.

Thread Plug Vs. Ring Gage: What Is The Difference?

Thread gages are precision tools used in many industries to check the accuracy of threaded components and confirm that they meet strict standards and tolerances. Two common types are the thread plug gage and the thread ring gage. Both verify the integrity of threads, but they serve distinct purposes: a thread plug gage checks internal threads such as tapped holes, while a thread ring gage checks external threads such as bolts and studs. Understanding the difference between a thread plug and a ring gage is essential for anyone working with threaded parts in aerospace, automotive, and general manufacturing.

Thread Plug Size Chart: A Guide To Thread Dimensions

Thread plug gages play a significant role in verifying the accuracy of threaded components in various industries, from aerospace to automotive. A thread plug size chart is a practical tool for understanding thread dimensions. It helps users identify the correct thread size and tolerance necessary for a precise fit. This article will discuss the importance of thread plug gages, how to read the size chart, and their relevance in manufacturing and quality control.
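To connect a size-chart entry to one of the dimensions a thread plug gage checks, the basic pitch diameter of a 60° Unified inch thread can be computed from the nominal major diameter and the threads per inch, using the basic-profile relation E = D - 0.649519 × P, where P = 1 / TPI. This is a minimal sketch: the function names are hypothetical, and it returns only the basic (nominal) value. The actual GO and NO-GO pitch diameter limits for a class such as 2B or 3B come from the tolerance tables in the applicable standard and are not computed here.

```python
import math

# Basic 60-degree Unified thread form:
# basic pitch diameter = basic major diameter - (3 * sqrt(3) / 8) * pitch
PD_FACTOR = 3 * math.sqrt(3) / 8  # approximately 0.649519


def pitch_from_tpi(tpi: float) -> float:
    """Convert threads per inch (TPI) to pitch in inches."""
    if tpi <= 0:
        raise ValueError("threads per inch must be positive")
    return 1.0 / tpi


def basic_pitch_diameter(major_diameter: float, tpi: float) -> float:
    """Basic (nominal) pitch diameter in inches; class tolerances are not applied."""
    return major_diameter - PD_FACTOR * pitch_from_tpi(tpi)


if __name__ == "__main__":
    # 1/4-20 UNC: 0.250 in nominal major diameter, 20 threads per inch
    print(f"{basic_pitch_diameter(0.250, 20):.4f}")  # prints 0.2175
```

For a 1/4-20 thread this gives 0.2175 in, which is the basic pitch diameter a chart would list before class tolerances are applied.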

Setting Plug Vs. Working Plug: Is There A Difference?

In precision gaging, the terms setting plug and working plug both come up often, but the two serve very different roles: a working plug checks internal threads on production parts, while a setting plug is a master used to set and verify thread ring gages. Understanding this distinction can make a significant difference in measurement accuracy and overall production quality.

Revolutionizing Bore Diameter Measurement With In-Process Automation And Robotics

Bore diameter measurement is crucial to quality assurance for many products in industries such as automotive, aerospace, and heavy machinery. Ensuring that a bore fits its mating part precisely is critical to maintaining product integrity and functionality.

How To Use A Thread Plug Gage Properly

A thread plug gage is a precision tool for confirming that threaded holes meet the required specifications. Using it correctly is vital for high-quality manufacturing and machining: improper use not only produces inaccurate results but can also damage the gage or the threaded hole. Following best practices for using a thread plug gage saves time, prevents errors, and extends the life of your equipment.

How To Properly Care For Your Go Setting Thread Plug

When it comes to maintaining the precision of your thread gages, knowing how to care for them is just as important as knowing how to use them. A GO setting thread plug is the master used to set GO thread ring gages, which in turn confirm that threaded components will fit correctly. Proper maintenance extends the life of the setting plug and supports consistent, reliable measurements. Misuse or poor handling can lead to incorrectly set gages, causing a domino effect that impacts your entire production process.

How Do You Gage NPT Threads?

When working with pipe threads, accuracy is vital. National Pipe Taper (NPT) threads are common in plumbing and industrial applications, and gaging them requires specialized tools. Master thread plugs play a significant role in determining whether a threaded connection will fit correctly, because they serve as standards for the dimensions and angles of NPT threads. Knowing how to use them helps ensure that pipes fit snugly and securely, preventing leaks and other costly issues.

Go Vs. No-Go Thread Plug: What’s The Difference?

Thread plugs are essential for verifying that threaded components fit correctly and function as intended. These tools check the internal threads of parts and confirm that they meet the required standards. Among the different types of thread plugs, “go” and “no-go” thread plugs are fundamental in determining whether a part is fit for use.
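The accept/reject logic behind a go / no-go thread plug check can be sketched as a simple rule: the GO member must thread through the full length of engagement, and the NO-GO member may start on the entry threads but must not run in past a small number of turns. This is a minimal sketch under stated assumptions: `PlugCheck`, `accept_internal_thread`, and `MAX_NOGO_TURNS` are hypothetical names, and the three-turn NO-GO limit is the value commonly cited for Unified inch thread plugs in ASME B1.2. Other standards may set different limits, so confirm the value against the standard your drawing references.

```python
from dataclasses import dataclass

# Assumed NO-GO entry limit (in complete turns); commonly cited for Unified
# inch thread plugs, but confirm against the standard you actually work to.
MAX_NOGO_TURNS = 3.0


@dataclass
class PlugCheck:
    go_enters_full_length: bool  # GO member threaded through the full length of engagement
    nogo_turns_entered: float    # complete turns the NO-GO member entered before stopping


def accept_internal_thread(check: PlugCheck, max_nogo_turns: float = MAX_NOGO_TURNS) -> bool:
    """Return True when an internal thread passes a GO / NO-GO plug inspection."""
    if not check.go_enters_full_length:
        # GO failed: the thread is undersize or damaged at maximum material
        return False
    # NO-GO may engage the entry threads but must not enter beyond the limit
    return check.nogo_turns_entered <= max_nogo_turns


if __name__ == "__main__":
    print(accept_internal_thread(PlugCheck(True, 1.5)))  # True
    print(accept_internal_thread(PlugCheck(True, 4.0)))  # False
```

Keeping the turn limit as a parameter rather than hard-coding it makes the rule easy to adapt when a different thread standard applies.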

Demystifying Master Set Plug Tolerances

Accurate measurements are vital in manufacturing to ensure product quality and consistency. Master set plug tolerances are key to this process, especially regarding thread gaging. Understanding the differences between these tolerances and how they impact your work can help you make more informed decisions about your manufacturing processes.

A Guide To Setting Thread Ring Gages To Your Setting Plug

Setting a thread ring gage to a setting plug is a key step in making accurate measurements across many manufacturing operations, because the precision of thread gage calibration directly affects whether inspected components will function as intended. One of the most effective ways to achieve this accuracy is to use a truncated setting plug, which serves as the reference for the thread ring gage. The following guide walks you through the process and shows how to verify that the ring gage's pitch diameter is set correctly.